Today we are going to discuss a very well-known and insightful problem - Josephus Problem. The solution we will discuss utilizes recursion. So, some underlying complexity of recursion will also be cleared.

The Story

The problem starts with a story. Yosef ben Matityahu, as Josephus was known to the Jews, was born in the

Rome-occupied Jerusalem in 37 CE. He became a community leader and an army

commander during the Roman invasion of Israel, which ultimately resulted

in the destruction of the 2nd Beit Hamikdash. During his defense of the

Galilee region, Josephus and his 40 Jewish soldiers were trapped in a

fortress surrounded by the Roman army. They knew that capture was not an

option, as they would be tortured and their bodies mutilated, so they

decided that death was the only option. But, there was another problem. In

Jewish law, it is better to kill someone than to commit suicide. So,

together they devised a system where all the soldiers got in a circle, and

each soldier killed his comrade to the left of him (in succession) until

there would be one soldier left who would commit suicide. This plan would

minimize the number of suicides in an organized manner, thereby solving

their initial problems. However, Josephus had a different plan in mind: he

wanted to survive and turn himself into the Romans. So, knowing that he

couldn’t run away since his soldiers would turn on him, he had to

calculate which place in the circle he had to be to survive this “circle

of death.”

There were 41 people in the circle, and Josephus

correctly calculated that to survive, he would have to be number 19.

Josephus’ plan worked, and after turning himself in to the Romans, he

became a famous historian by the name of Josephus Flavius and lived to

tell this incredible story. Since then, this story has become a famous

math problem. Many have wondered how Josephus knew which spot in the

circle to be in and whether there is a formula to consistently determine

which spot will survive given any number of people.

The Problem Statement

So, that's the problem to be solved. For a more generalized case, we would consider the number of soldiers is n, and each one would kill the one, who is k persons left to him. The problem statement without all the killing and with some sparkling of math will be something like:

We are given the natural numbers n and . All natural numbers from to are written in a circle. First, count the k-th number starting from the first one and delete it. Then k numbers are counted starting from the next one, and the k -th one is removed again, and so on. The process stops when one number remains. It is required to find the last number.

Approaching the solution

Let's assume a function, joseph, that gives us the person's position to be killed in the next turn. Our function joseph(n, k) would take the value of n and k as input. This function would internally utilize the killer's position to kill this next person, and that's where the recursion comes in.

So, as the sword is passed to the next person of the last killed one. So we would get the next killer if we get the killed person of the last call, and add 1 to it, which would be something like the result from the previous call of the function +1, which should be the output of the next call.

As we know, for most of the recursion problems, we have to take a base case. Let's take a case where n = 1. That means we are considering the beginning, where person 1 was the killer, thus the best case.

But who would have the first killer(person 1 ) killed? Obviously, the k-th next person to him, person k. Again, if person 2 was the killer, who would he have killed? Person K+1 for sure.

Then how do we get the killed person from the position of the killer? 1 + (k-1) = k and 2 + (k-1) = k+1. Hmm...So adding (k-1) would do the trick. So until now, what we got is, our function will return the next person to be killed, so we would get the last standing person at the final call. And how will it predict the next to be killed? Somehow It will get the last killer. Add (k-1) to it to get the last killed person. And as the sword will be passed to the next person of the last killed person (who is also the next killer as he has the sword), we will find him out by adding 1 to the last killed person and return it as function output.

Feeling a little lost? Don't worry... the first time also happened to me when I thought about it. Just Take a deep breath.. and again read the last past. And now it would be more clear as we are going to write the pseudocode.

function joseph (n, k) {

return ( last killed person from last function call + (k-1) ) + 1

}

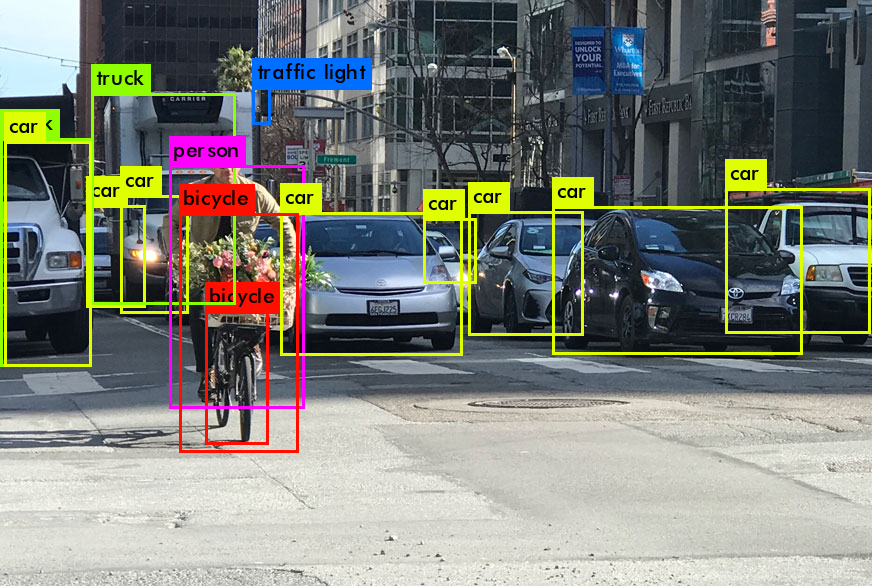

Now just one problem. Look at the picture I provided. As there is no person after 6, the loop passes through the beginning of the array. Let me give an example. Suppose Person 6 was our next killer. Who is he going to kill? From the previous code we see, we will get person 8 as the killed one. As there is person 1 beside person 6, he would kill person 2 if it was his turn.

So we have to come back to the beginning after passing through the end. And who is better than modulus to do the task?

So the modified pseudocode will be,

function joseph (n, k) {

return ( last killed person from last function call + (k-1) ) mod n + 1

}

Great!! We are almost done, Just to figure out what the internal function call would be. As every time a person gets killed, one person is reduced from the population(n-1), but the number of persons we jump over(k) remains constant.

The solution

the final recursive function to solve the Josephus problem,

function joseph (n, k) {

return ( joseph(n-1, k) + (k-1) ) mod n + 1

}